The Legal AI Engine Built to (Actually) Understand Contracts

Most legal AI tools fall apart under real legal pressure. Here’s why Syntracts stands apart.

TL;DR: Legal AI is booming, but most tools fall apart under real legal pressure. Here’s why Syntracts stands apart:

- Most “legal AI” is just a chatbot in disguise — fragile, generic, and risky

- Syntracts is an API-first infrastructure layer built from the ground up for on-prem, secure, and precise legal work

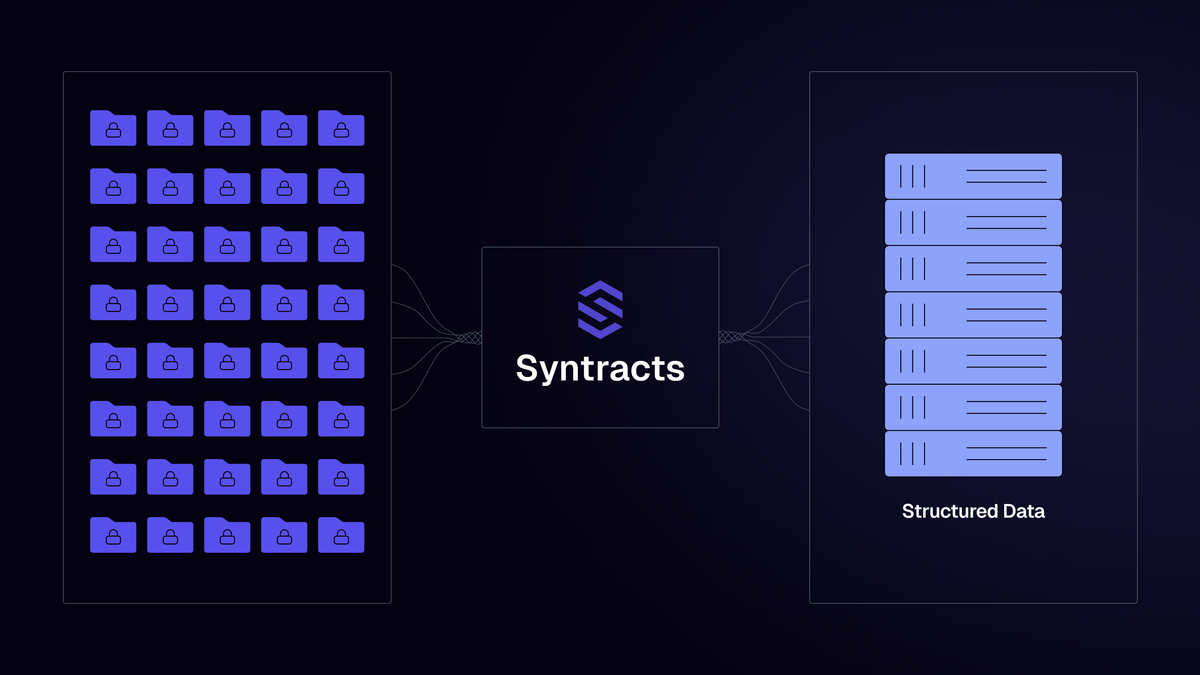

- Syntracts doesn’t summarize contracts — it understands them, extracting structured, usable data that improves the AI tools and systems your firm already uses

- When the stakes are legal, “good enough” isn’t good enough

Why Most Legal AI Isn’t Built for Legal Work

Legal AI is having its breakout moment. But beneath the flashy demos and slick chat interfaces, most tools aren’t equipped for the rigor, precision, and accountability that real legal work demands. They rely on unstable foundations and introduce more risk than relief.

Syntracts is different. It doesn’t “apply AI” to legal work. It was built from scratch to do legal work securely, scalably, and with the depth and nuance that contracts demand. This isn’t AI wrapped in legal branding. This is legal infrastructure, powered by AI.

The Legal AI Hype Cycle: What Most Tools Get Wrong

The past year has seen a meteoric rise in legal AI interest. More than 70% of law firms have reported testing AI tools, but only 40% have deployed them into everyday workflows. Why the gap? Most legal AI tools are optimized for pitch decks, not practice.

Beneath the polished UIs, these offerings often boil down to little more than wrappers around large language models (LLMs). They rely on brittle prompt chains, cloud APIs, and generic models trained on data far removed from actual legal complexity. Their contract summaries look convincing until the stakes get real. Once latency, hallucinated outputs, or unsecured data flows enter the equation, “good enough” quickly becomes unacceptable.

The Problem: Legal AI That Misses the Fine Print

The legal AI space is crowded with lookalike solutions—products that wear the uniform of legal tech but are powered by the same underlying engines as consumer chatbots. Many of today’s biggest names in legal AI simply layer chat interfaces over general-purpose LLMs. Behind the scenes, these tools string together a series of prompts designed to simulate legal workflows, not understand them.

Common breakdowns include:

- Misinterpreting conditional clauses, like who holds a termination right or when notice is required

- Omitting qualifiers that radically change the meaning of a provision

- Reducing structured obligations to vague summaries

This isn’t just a technical issue; it’s a legal liability. A missed term or misread clause can derail a negotiation, breach a client’s trust, or compromise a firm’s compliance obligations.

The Stakes Are Too High for Guesswork

Legal work is not a domain for approximations. A missed clause isn’t a UX bug—it’s a risk to the client, the deal, and the firm’s reputation. In contract review, the difference between “may” and “shall” can be millions of dollars.

- 55% of legal professionals list data privacy as their #1 barrier to AI adoption

- Most tools depend on third-party APIs, exposing firms to vendor risk and confidentiality breaches

- Even small hallucinations can lead to massive downstream risk in litigation, dealmaking, or compliance

In law, quality isn’t a feature. It’s a foundation.

The Syntracts Stack: Purpose-Built for Legal Precision

Syntracts doesn’t just “apply AI” to legal workflows—it was designed from the ground up to meet legal standards, not just tech demos.

Here’s what makes us different:

- API-First: Your data shouldn't be trapped in a new app or silo. Syntracts acts as an API-first infrastructure layer, so your information can move freely.

- Purpose-Built Intelligence: No black-box APIs, no third-party models. Syntracts leverages thousands of specialized small language models, each an expert in its own particular area of a contract or document, trained on synthetic legal data, not internet text.

- On-Prem Deployment: Full control from day one — no external API calls, no data leaving the building.

- Structured Outputs: Syntracts doesn’t guess at meaning. It extracts obligations, terms, and provisions into structured, usable data — perfect for benchmarking, compliance, or risk analysis.

- Supercharge Existing AI: Your firm’s specific, structured data is fed into the AI systems and assistants you already use, reducing hallucinations and increasing precision.

Syntracts is a legal-grade engine designed to deliver clarity, not conversations.
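To make the “Structured Outputs” idea concrete, here is a minimal sketch of what a structured record for a single termination clause might look like. The class name, field names, and sample clause are hypothetical and chosen for illustration only; they are not Syntracts’ actual schema or API.

```python
import json
from dataclasses import dataclass, asdict
from typing import Optional


@dataclass
class TerminationRight:
    # Hypothetical fields for illustration; not the Syntracts schema.
    holder: str                 # party that holds the termination right
    modality: str               # "may" (discretionary) or "shall" (mandatory)
    notice_days: Optional[int]  # notice period, if the clause states one
    condition: Optional[str]    # qualifier that limits when the right applies
    source_text: str            # verbatim clause the record was derived from


# An invented sample clause and the record a reviewer would expect from it.
record = TerminationRight(
    holder="Customer",
    modality="may",
    notice_days=30,
    condition="Supplier fails to cure a material breach",
    source_text=(
        "Customer may terminate this Agreement on 30 days' written notice "
        "if Supplier fails to cure a material breach."
    ),
)


def to_json(right: TerminationRight) -> str:
    """Serialize the record so downstream systems can consume it."""
    return json.dumps(asdict(right), indent=2)


print(to_json(record))
```

Unlike a prose summary, a record like this keeps the holder, the qualifier, and the “may” versus “shall” distinction as separate fields, so each can be checked, compared across contracts, or passed to another system.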

Why Prompt-Stacked Chatbots Can’t Keep Up

Ask a chatbot to summarize a contract, and it will give you something plausible. Ask it the same question a different way, and you might get something completely different. That’s not intelligence; it’s instability.

Most of today’s “legal AI” tools aren’t built for law at all. They’re consumer-grade chat interfaces dressed up for the enterprise. Behind the curtain, they rely on long chains of prompts, glued together to simulate legal workflows. Each of those prompts introduces another point of failure. Misplace one assumption or tweak one phrase, and the entire output can shift.

This fragility shows up fast in legal use cases:

- Outputs change based on how a question is phrased

- Clause interpretation varies from contract to contract

- Summaries gloss over critical obligations

- Prompt stacks break when workflows shift or scale
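The first item on that list, outputs that change with phrasing, is something a firm can measure for itself. The sketch below is one simple way to do it, assuming a hypothetical `ask` callable that wraps whichever tool is being evaluated; `fake_tool` is a stand-in written for this example, not a real product.

```python
from collections import Counter
from typing import Callable, Iterable


def normalize(answer: str) -> str:
    """Lowercase, collapse whitespace, and drop a trailing period."""
    return " ".join(answer.lower().split()).rstrip(".")


def agreement_rate(ask: Callable[[str], str], phrasings: Iterable[str]) -> float:
    """Share of phrasings whose normalized answer matches the most common one."""
    answers = [normalize(ask(p)) for p in phrasings]
    if not answers:
        raise ValueError("need at least one phrasing")
    most_common_count = Counter(answers).most_common(1)[0][1]
    return most_common_count / len(answers)


def fake_tool(question: str) -> str:
    # Hypothetical stand-in: answers differently for one particular phrasing.
    return "60 days" if "how long" in question.lower() else "30 days."


phrasings = [
    "What is the termination notice period?",
    "How long is the notice period for termination?",
    "State the notice required to terminate.",
]

rate = agreement_rate(fake_tool, phrasings)
print(f"agreement across phrasings: {rate:.2f}")
```

Here two of the three phrasings agree, so the rate falls below 1.0. A tool that reads the clause the same way every time would score 1.0 regardless of how the question is worded.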

These tools don’t actually understand contracts, and when legal nuance is flattened into chatbot conversation, firms take on risks they can’t afford.

Built for Legal. Not Just Branded Legal.

In a sea of tools that claim to be legal AI, Syntracts is one of the few that is.

We turn legal complexity into structured intelligence. Whether it’s thousands of contracts, regulatory audits, or vendor risk, we help legal teams act faster, stay compliant, and scale with confidence.

Ready to see purpose-built legal AI in action?

Request a demo and discover what structured legal intelligence looks like at scale.